[Swift] Getting the volume waveform of a song in the iTunes library and displaying it as a UIImage

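Hello, this is Bloom from the system development team at 株式会社MIX.

Let me get straight to the point: in this post I would like to share what I learned on the job about getting a waveform from a song in the iTunes library and displaying it as a UIImage.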

Getting a song from the library

First, get a song from the library. This time we use MPMediaPickerController.

    import UIKit
    import MediaPlayer
    import AVFoundation

    extension ViewController: MPMediaPickerControllerDelegate {

        // Connect this action from the storyboard.
        @IBAction func getAudio(_ sender: Any?) {
            let picker = MPMediaPickerController()
            picker.delegate = self
            picker.allowsPickingMultipleItems = false
            // Hide items with protected assets and cloud-only items.
            picker.showsItemsWithProtectedAssets = false
            picker.showsCloudItems = false
            present(picker, animated: true, completion: nil)
        }

        func mediaPicker(_ mediaPicker: MPMediaPickerController,
                         didPickMediaItems mediaItemCollection: MPMediaItemCollection) {
            dismiss(animated: true, completion: nil)
            // Stop here if nothing was picked or the item has no readable asset URL.
            guard let url = mediaItemCollection.items.first?.assetURL else { return }
            loadImage(asset: AVURLAsset(url: url))
        }

        // Dismiss the picker when the user cancels.
        func mediaPickerDidCancel(_ mediaPicker: MPMediaPickerController) {
            dismiss(animated: true, completion: nil)
        }

        func loadImage(asset: AVURLAsset) {
            Task {
                let image = await self.renderImageAudioPictogramForAsset(asset: asset)
                DispatchQueue.main.async {
                    // imageView is also placed in the storyboard.
                    self.imageView.image = image
                }
            }
        }
    }

Reading the song data from the AVURLAsset

Next, let's read the data from the AVURLAsset we obtained. The goal here is to turn the volume into an array.
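As a rough illustration of what "turning the volume into an array" means, the sketch below reduces interleaved 16-bit PCM samples to one value per bucket and channel. The helper name `peakEnvelope` and its parameters are hypothetical and not part of the article's code. It also swaps in the peak absolute value of each bucket, whereas the function in this section averages the signed samples.

```swift
/// Hypothetical helper: one peak value per bucket and channel,
/// computed from interleaved 16-bit PCM samples ([L, R, L, R, ...] for stereo).
func peakEnvelope(interleaved samples: [Int16], channelCount: Int, framesPerBucket: Int) -> [Int] {
    precondition(channelCount > 0 && framesPerBucket > 0)
    let frameCount = samples.count / channelCount
    var envelope: [Int] = []
    var bucketStart = 0
    while bucketStart < frameCount {
        // The last bucket may be shorter than framesPerBucket.
        let bucketEnd = min(bucketStart + framesPerBucket, frameCount)
        for channel in 0..<channelCount {
            var peak = 0
            for frame in bucketStart..<bucketEnd {
                // Widen to Int first so abs(Int16.min) cannot overflow.
                peak = max(peak, abs(Int(samples[frame * channelCount + channel])))
            }
            envelope.append(peak)
        }
        bucketStart = bucketEnd
    }
    return envelope
}

// Four stereo frames folded into two buckets of two frames each.
let stereo: [Int16] = [100, -5, -300, 7, 20, -900, 10, 4]
print(peakEnvelope(interleaved: stereo, channelCount: 2, framesPerBucket: 2))
// prints "[300, 7, 20, 900]"
```

A peak (or a mean of absolute values) tends to follow perceived loudness more closely than a signed average, because positive and negative samples no longer cancel out. Which reduction looks best is a matter of taste.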

    func renderImageAudioPictogramForAsset(asset: AVURLAsset) async -> UIImage? {
        do {
            let reader = try AVAssetReader(asset: asset)
            guard let track = try await asset.loadTracks(withMediaType: .audio).first else { return nil }

            // Decode to 16-bit, little-endian, interleaved integer PCM.
            let outputSettings: [String: Any] = [
                AVFormatIDKey: NSNumber(value: kAudioFormatLinearPCM),
                AVLinearPCMBitDepthKey: NSNumber(value: 16),
                AVLinearPCMIsBigEndianKey: NSNumber(value: false),
                AVLinearPCMIsFloatKey: NSNumber(value: false),
                AVLinearPCMIsNonInterleaved: NSNumber(value: false)
            ]
            let output = AVAssetReaderTrackOutput(track: track, outputSettings: outputSettings)
            reader.add(output)

            var sampleRate: Double = 44100
            var channelCount: UInt32 = 2
            // Get the sample rate and channel count from the track's format descriptions.
            // This sample assumes 1 or 2 channels.
            let formatDescriptions = try await track.load(.formatDescriptions)
            for description in formatDescriptions {
                if let format = CMAudioFormatDescriptionGetStreamBasicDescription(description) {
                    sampleRate = format.pointee.mSampleRate
                    channelCount = format.pointee.mChannelsPerFrame
                }
            }
            guard channelCount == 1 || channelCount == 2 else { return nil }

            let channels = Int(channelCount)
            let bytesPerFrame = 2 * channels            // 2 bytes per 16-bit sample
            let samplesPerPixel = Int(sampleRate / 50)  // frames averaged into one pixel column

            var normalizeMax = 0
            var fullSongData: [Int16] = []
            var totalLeft = 0
            var totalRight = 0
            var sampleTally = 0

            guard reader.startReading() else { return nil }

            while reader.status == .reading {
                guard let sampleBuffer = output.copyNextSampleBuffer(),
                      let blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer) else { continue }

                // Copy the decoded bytes into an Int16 array.
                let length = CMBlockBufferGetDataLength(blockBuffer)
                var samples = [Int16](repeating: 0, count: length / MemoryLayout<Int16>.size)
                CMBlockBufferCopyDataBytes(blockBuffer, atOffset: 0,
                                           dataLength: samples.count * MemoryLayout<Int16>.size,
                                           destination: &samples)

                let frameCount = length / bytesPerFrame
                for i in 0..<frameCount {
                    if channels == 2 {
                        totalLeft += Int(samples[i * 2])
                        totalRight += Int(samples[i * 2 + 1])
                    } else {
                        totalLeft += Int(samples[i])
                    }
                    sampleTally += 1

                    if sampleTally > samplesPerPixel {
                        // Average the accumulated samples into one value per channel.
                        // Working in Int avoids the overflow that abs(Int16.min) would trigger.
                        let left = totalLeft / sampleTally
                        normalizeMax = max(normalizeMax, abs(left))
                        fullSongData.append(Int16(left))
                        if channels == 2 {
                            let right = totalRight / sampleTally
                            normalizeMax = max(normalizeMax, abs(right))
                            fullSongData.append(Int16(right))
                        }
                        totalLeft = 0
                        totalRight = 0
                        sampleTally = 0
                    }
                }
                CMSampleBufferInvalidate(sampleBuffer)
            }

            // Any status other than .completed produces no image.
            guard reader.status == .completed else { return nil }
            return audioImageGraph(data: fullSongData,
                                   normalizeMax: Int16(clamping: normalizeMax),
                                   channelCount: channels,
                                   imageHeight: 200)
        } catch {
            print(error)
            return nil
        }
    }

Turning the data into a waveform

Now that the volume is in an array, all that is left is to render it as an image. We draw it with CGContext.
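The drawing step comes down to mapping each value onto a vertical line centered in the image. Here is a minimal sketch of that mapping, assuming the largest value should just reach the top and bottom edges. The helper `barExtent` is hypothetical and does not appear in the article's code.

```swift
/// Hypothetical helper: top and bottom y coordinates of the vertical line
/// drawn for one value, centered in an image of the given height.
func barExtent(sample: Int, normalizeMax: Int, imageHeight: Double) -> (top: Double, bottom: Double) {
    let center = imageHeight / 2
    // Silent input: avoid dividing by zero and draw a flat line at the center.
    guard normalizeMax > 0 else { return (center, center) }
    // The largest value maps to half the image height on each side of the center.
    let halfHeight = Double(abs(sample)) / Double(normalizeMax) * center
    return (center - halfHeight, center + halfHeight)
}

let loudest = barExtent(sample: -1000, normalizeMax: 1000, imageHeight: 200)
print(loudest.top, loudest.bottom)  // prints "0.0 200.0"
```

Scaling by half the image height, rather than the full height, keeps the loudest column inside the image instead of clipping it.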

func audioImageGraph(data: [Int16], normalizeMax: Int16, channelCount: Int, imageHeight: Double) -> UIImage? {

// 1単位1px

let imageSize = CGSizeMake(CGFloat(data.count / channelCount), imageHeight)

UIGraphicsBeginImageContext(imageSize)

let context = UIGraphicsGetCurrentContext()!

context.setFillColor(UIColor.white.cgColor)

context.setAlpha(1.0)

let rect = CGRect(origin: .zero, size: imageSize)

let leftColor = UIColor.black.cgColor

let rightColor = UIColor.red.cgColor

context.fill(rect)

context.setLineWidth(1.0)

let center = imageHeight / 2

let sampleAdjustmentFactor = imageHeight / Double(normalizeMax)

let samples = data

for intSample in 0..<samples.count / channelCount {

if channelCount == 2 {

let left = samples[intSample * 2]

var pixels = Double(left)

pixels *= sampleAdjustmentFactor

context.move(to: CGPoint(x: CGFloat(intSample), y: center - pixels))

context.addLine(to: CGPoint(x: CGFloat(intSample), y: center + pixels))

context.setStrokeColor(leftColor)

context.strokePath()

// 2つ目のチャンネルも画像化したいならここで処理

let right = samples[intSample * 2 + 1]

}

else {

let left = samples[intSample]

var pixels = Double(left)

pixels *= sampleAdjustmentFactor

context.move(to: CGPoint(x: CGFloat(intSample), y: center - pixels))

context.addLine(to: CGPoint(x: CGFloat(intSample), y: center + pixels))

context.setStrokeColor(leftColor)

context.strokePath()

}

}

let newImage = UIGraphicsGetImageFromCurrentImageContext()

UIGraphicsEndImageContext()

return newImage

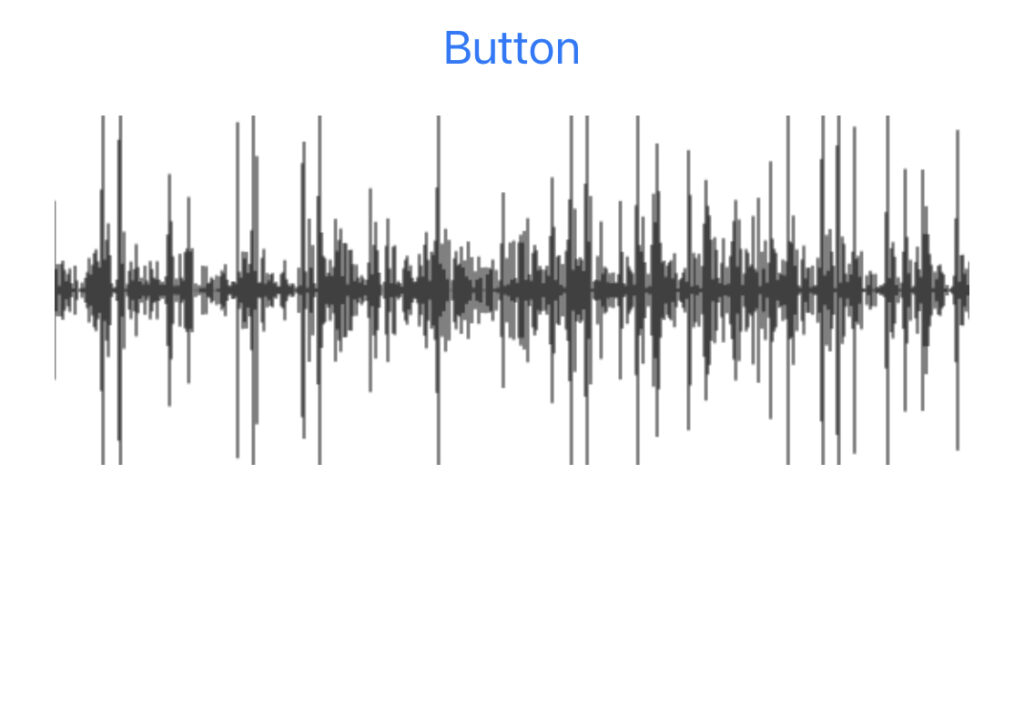

}実行結果

To make it fit on the screen, I forced it into view with AspectFit. In a real app, consider something like a scrollable view instead.
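To see why the image does not fit on screen, it helps to estimate its width. The code above folds about `sampleRate / 50` frames into each pixel column, which works out to roughly 50 columns per second of audio. The sketch below estimates that width and, as one possible alternative to scrolling, computes how many frames each column would need to cover for the whole song to fit a given width. Both helper names are hypothetical.

```swift
/// Hypothetical helper: approximate image width in pixels for a song of the
/// given length, assuming about 50 pixel columns per second of audio.
func waveformWidth(durationSeconds: Double, columnsPerSecond: Double = 50) -> Int {
    Int(durationSeconds * columnsPerSecond)
}

/// Hypothetical helper: frames to fold into each column so that the whole
/// song fits within `targetWidth` pixel columns.
func framesPerColumn(totalFrames: Int, targetWidth: Int) -> Int {
    precondition(targetWidth > 0)
    // Round up so the result never needs more than targetWidth columns.
    return max(1, (totalFrames + targetWidth - 1) / targetWidth)
}

// A 4-minute song at 44.1 kHz.
print(waveformWidth(durationSeconds: 240))                           // prints "12000"
print(framesPerColumn(totalFrames: 240 * 44_100, targetWidth: 375))  // prints "28224"
```

A fixed number of columns per second suits a scrolling view, since every song uses the same time scale. Fitting to a target width suits a thumbnail or overview.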

And that is all it takes to display a song's waveform. Glad it worked out.